I’d like to share some of my experiences with Bitcoin “mining” for those who have asked about it. The two main sites that can give you a run-down on Bitcoins are the Wikipedia article on the Bitcoin Protocol and the main Bitcoin Wiki site. I’d like to give some feedback on my personal experiences as I tried to generate my own Bitcoins.

Unless you are given Bitcoins (also referred to as “BTC”) in exchange for goods and/or services, you have to attempt to be the first person to figure out a value that, when hashed together with the latest value spit out by the Bitcoin powers that be, results in a number with a pre-determined number of leading zeroes. The more zeroes that the system requires as proof of doing the work, the harder the problem is and the longer it takes to solve. The system is configured to release BTC into the wild at a fairly constant rate. To slow things down when the answers are being calculated too rapidly, the system will require an increasing number of leading zeroes in the hash result.
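To make the "leading zeroes" idea concrete, here is a toy sketch in Python. It is a simplification and not the real mining code: the header bytes, the `mine` and `leading_zero_bits` names, and the 16-bit requirement are all made up for illustration. The real network compares the hash against a numeric target rather than literally counting zeroes, and it demands far more work than this.

```python
import hashlib

def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits the digest starts with."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()

def mine(header: bytes, zero_bits: int, max_nonce: int = 2**32):
    """Try nonces until SHA-256(SHA-256(header + nonce)) has enough leading zero bits."""
    for nonce in range(max_nonce):
        payload = header + nonce.to_bytes(4, "little")
        digest = hashlib.sha256(hashlib.sha256(payload).digest()).digest()
        if leading_zero_bits(digest) >= zero_bits:
            return nonce, digest.hex()
    return None  # no nonce in the range worked

# Hypothetical stand-in for the data a real miner would hash.
result = mine(b"previous block hash goes here", zero_bits=16)
print(result)
```

Each extra zero bit doubles the average number of guesses, which is why asking for more zeroes slows everybody down.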

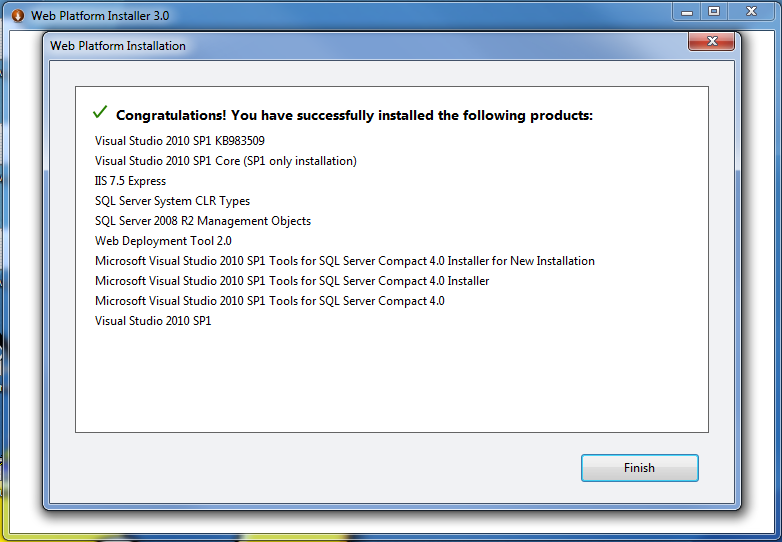

In the early days, the hashing operations were done using the CPU of a user’s computer, so the field was relatively level. Pretty much anybody could download the Bitcoin-Qt software, set the “server=1” and “gen=1” options in the “bitcoin.conf” file and away they’d go. Well, away they’d go once the entire blockchain had been downloaded and verified (which can take a couple of days when you’re just getting started). The CPU of your computer would be worked pretty hard, so it wasn’t much use for anything else. The people with the higher-end CPUs had an advantage over those with less capable CPUs, but the average person had a chance to at least compete.

The field changed a bit when some programmers realized that they could leverage the plethora of GPU (graphics processing unit) cores contained in many higher-end graphics cards. The nVidia cards did okay, getting in the tens of MH/s (megahashes per second), which was much faster than the standard CPUs could perform the hashing operations. The AMD graphics cards did much better, with my Radeon HD 7970 cards racking up about 580MH/s each. In order to leverage the GPUs, however, you had to forgo use of the Bitcoin-Qt software as a mechanism for calculating the hashes and only use it as your Bitcoin wallet, both to store your BTC balance and to send and receive payments with other Bitcoin users.

In the spring of 2013, I tinkered with BTC mining using my existing nVidia graphics cards. After weeks of cranking away at ~60MH/s (million hashes per second), I decided that I needed to build a new server for the house. I took a look at the Mining Hardware Comparison tables and it turned out that the AMD Radeon line of GPUs was much better at mining operations than the nVidia cards. I believe the Radeons had more cores and that they had a single instruction for performing the hashing operation, which made them much more suitable for BTC mining.

In doing my research, I found that some enthusiasts were plugging multiple graphics cards into a single case and running them all in parallel to increase their likelihood of guessing the correct hashing value. I decided that my new server had to be able to handle at least three full-length PCI-e graphics cards. Since most high-end cards took up two slots due to the fans tacked onto the side, the PCI-e slots needed to be staggered at every other position so that I could fit all three cards onto the motherboard. The other problem was that the power required to run each graphics card at full speed was pretty substantial. Some enthusiasts tried to get away with a 500 watt power supply, only to have it (and/or the graphics card) get fried in the process. This meant that I would need to size the power supply accordingly.
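As a rough illustration of the power supply sizing, here is a small Python sketch that adds up full-load power draw and compares it to a supply's rating. The wattage figures and the `psu_load_fraction` helper are assumptions I picked for the example, not measurements from my rig, so check the real numbers for your own parts.

```python
def psu_load_fraction(psu_watts: float, component_watts: dict[str, float]) -> float:
    """Return the fraction of the power supply's rating used at full load."""
    return sum(component_watts.values()) / psu_watts

# Hypothetical full-load draws in watts (illustrative only).
rig = {
    "gpu_1": 250,
    "gpu_2": 250,
    "gpu_3": 250,
    "cpu": 125,
    "board_drives_fans": 75,
}

small_psu = psu_load_fraction(500, rig)   # above 1.0 means the supply is overloaded
big_psu = psu_load_fraction(1200, rig)    # below 1.0 leaves some headroom

print(f"500 W supply:  {small_psu:.0%} of rating")
print(f"1200 W supply: {big_psu:.0%} of rating")
```

With numbers in that ballpark, a 500 watt supply is asked for far more than it can deliver, while a 1200 watt unit still has some breathing room.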

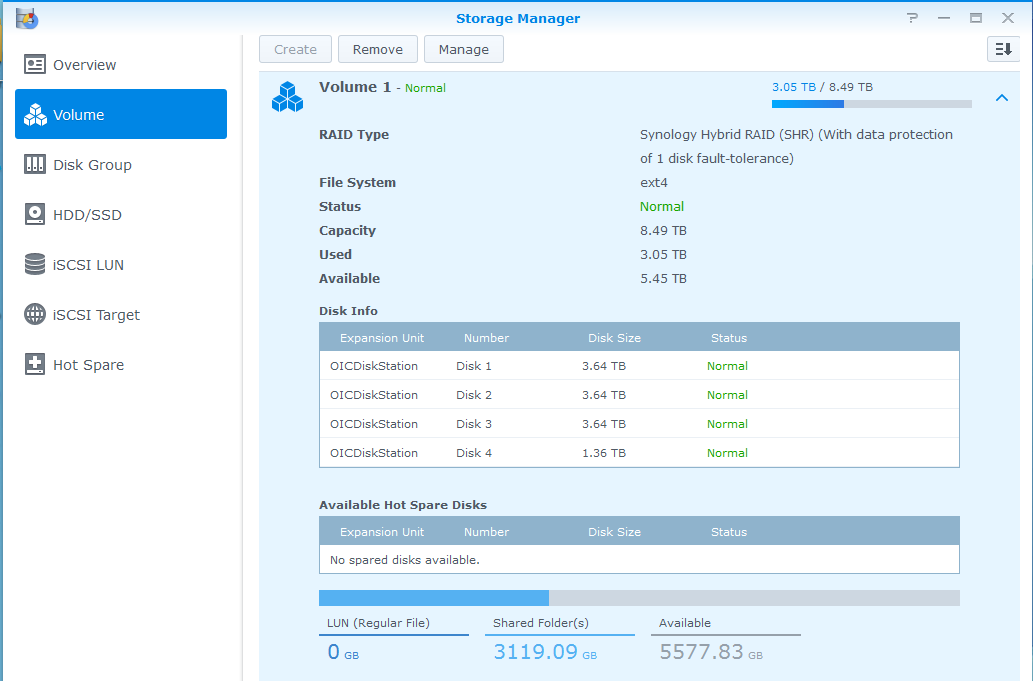

The mining workstation that I ended up with had the following hardware:

- Gigabyte AMD Radeon HD 7970 Graphics Cards (3)

- Corsair AX 1200 Watt Power Supply

- Corsair 16GB Vengeance DDR3 1600MHz memory

- GIGABYTE GA-990FXA-UD5 Motherboard (AMD processor slot)

- AMD FX-8350 FX-Series Eight-Core Processor Edition, Black AM3

- Corsair Graphite Series Black 600T Mid-Tower Computer Case (CC600TM)

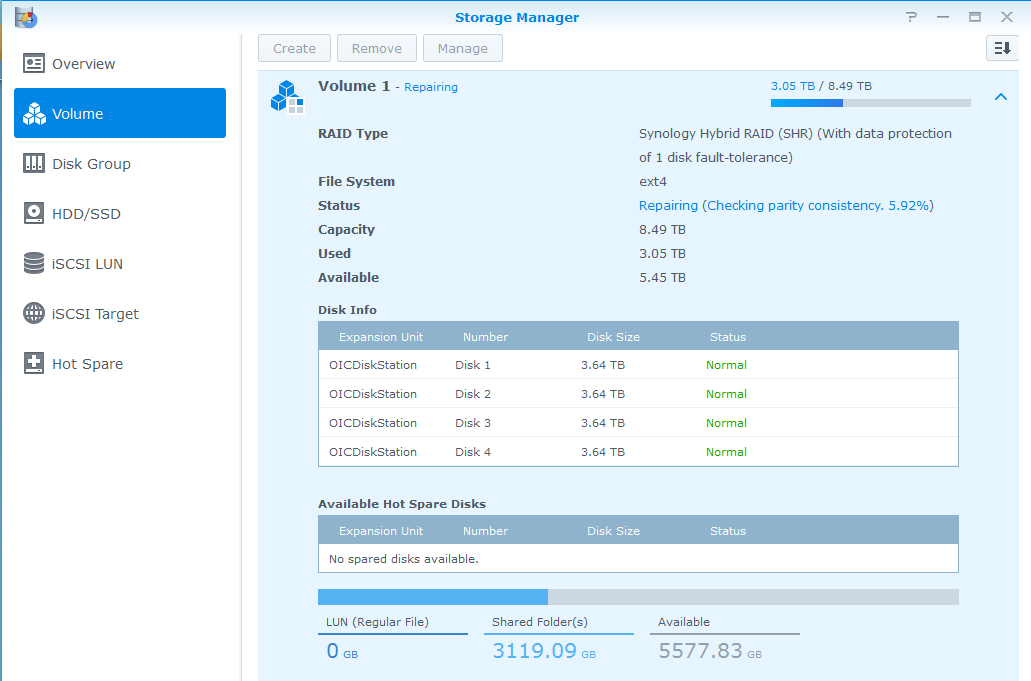

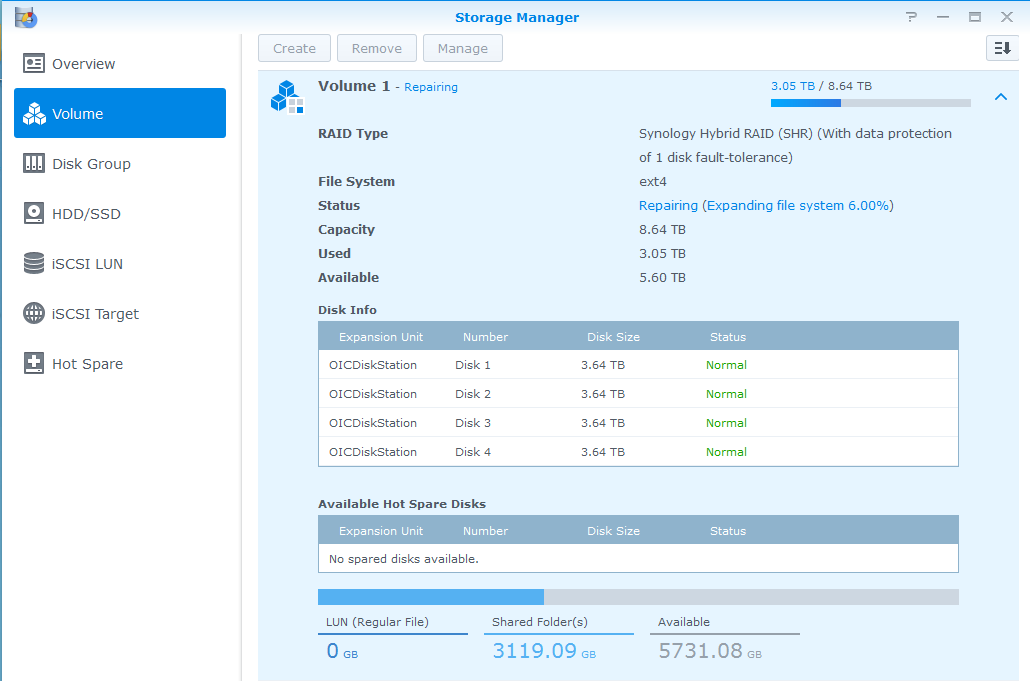

The good news was that when I initially fired up the workstation and ran the CGMiner software to start hashing with the GPUs, I was cranking out ~1.6GH/s (1.6 gigahashes per second). The bad news was that two of the GPUs heated up pretty rapidly, since their fan intakes sat right up against the printed circuit board of the card next to them. The software throttled back their workload to try to keep the GPUs at a safe temperature, which meant that the sustained hashing rate ramped back down to ~1.1GH/s. If I removed the middle graphics card, the airflow was restored for both of the remaining graphics cards and they were capable of doing ~1.2GH/s with just the two cards. It didn’t make sense to keep all three in the unit, so I took the third graphics card and put it into a separate PC that could only handle one full-length PCI-e card. With both systems mining, I got ~1.6GH/s, which was pretty good at the time.

GPU mining rig with the middle graphics card removed

I ran the two systems for several weeks, hoping to strike pay-dirt and amass my fortune in Bitcoinage. I was running them during the early spring when it was pretty cool out, so the heat that they added to the home office was welcome. I even moved one of the mining workstations down into our downstairs bathroom to keep that warm. Alas, after several weeks, I had not struck gold. Not a single Bitcoin was mined.

More research showed that the odds were pretty stacked against me. Too many people had too much hashing power in use, and the likelihood of me beating them to the answer was pretty slim. The fallback was to join a mining pool. This is where you and several others join forces and all contribute your hashing efforts to a pool operator. The odds were much better that the combined might of the pool would come up with the answer and collect the BTC. The pool operator would keep track of the number of hashes that each member had contributed towards finding the correct answer and would divide the BTC between the participants accordingly. My efforts yielded about 0.1 BTC every week or so, which ever so slowly built up. Every once in a while I would try solo mining and not contribute my efforts to the pool, but I’d always end up going back to working with the pool. Hey, 0.1 BTC in the hand was much better than 0 BTC in the bush ;?)
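The trade-off between solo and pooled mining can be sketched with a little arithmetic in Python. On average, finding a block takes about difficulty × 2^32 hashes. The difficulty value, the miner names and the share counts below are made up for illustration, and the simple proportional split ignores pool fees and the other payout schemes that real pools use.

```python
def expected_days_per_block(hashrate_hs: float, difficulty: float) -> float:
    """Average days to find one block, at roughly difficulty * 2**32 hashes per block."""
    expected_hashes = difficulty * 2**32
    return expected_hashes / hashrate_hs / 86_400  # 86,400 seconds per day

def split_reward(reward_btc: float, shares: dict[str, int]) -> dict[str, float]:
    """Divide a block reward in proportion to the shares each miner submitted."""
    total = sum(shares.values())
    return {miner: reward_btc * count / total for miner, count in shares.items()}

# Hypothetical difficulty; the real value changes every couple of weeks.
solo_days = expected_days_per_block(hashrate_hs=1.6e9, difficulty=10_000_000)

# Hypothetical pool where I contributed 4 of every 1,000 shares.
payouts = split_reward(25.0, {"me": 4, "alice": 600, "bob": 396})

print(f"Solo: about {solo_days:.0f} days per block on average")
print(f"Pool: my cut of this block is {payouts['me']:.3f} BTC")
```

Solo mining is all or nothing and the average wait says nothing about when (or whether) you actually get lucky, whereas the pool pays out small amounts steadily.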

When the weather started warming up, I had to shut down my mining operations. The heat that the GPUs were cranking out was making the office uncomfortably warm. The WAF (wife acceptance factor) also sank to an all-time low when the noticeably higher electric bills started rolling in. With the weather warming up, the bills would only get worse, as I’d have to run the A/C to remove the excess heat as well. So my mining efforts ceased over the summer.

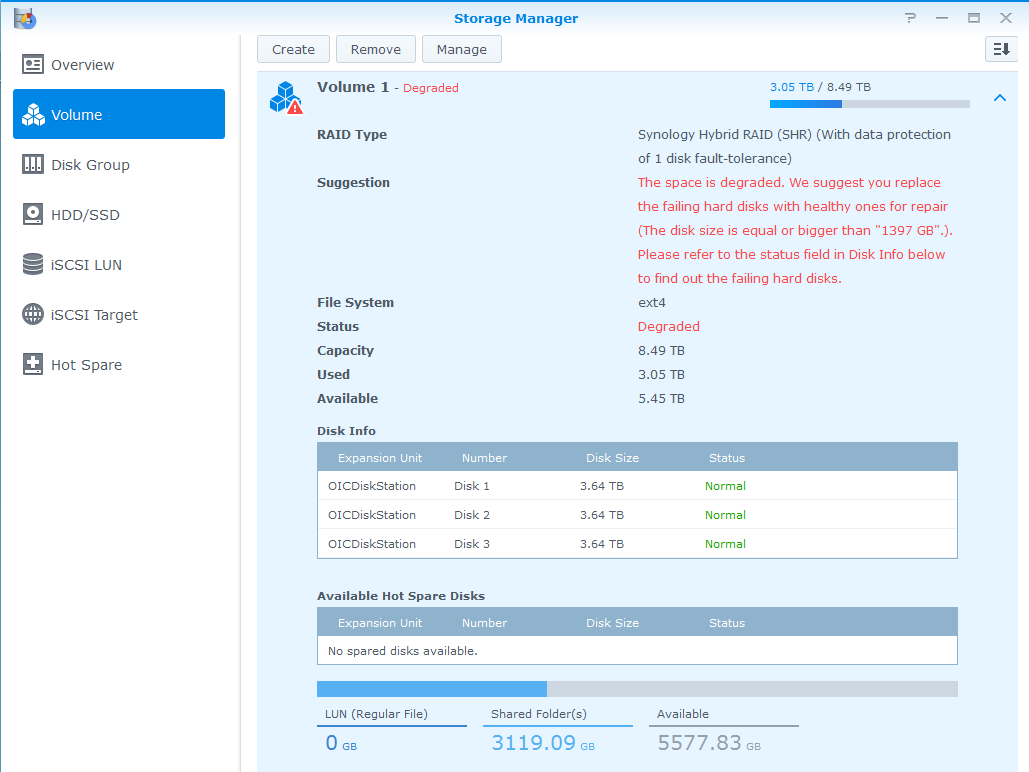

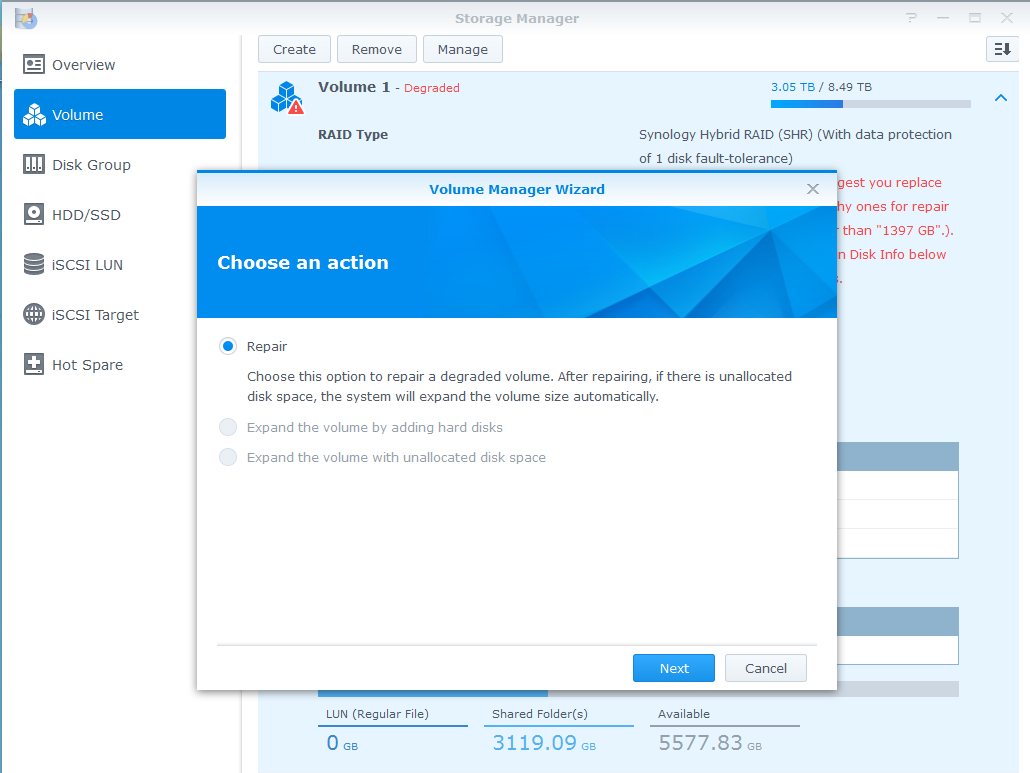

I tried firing the units back up this winter since the added heat was a bonus, but the GPU mining capacity was just not competitive with the new FPGAs (Field Programmable Gate Arrays), which were just as fast as the GPUs, if not faster. The newest big boys on the block are the ASIC mining rigs, which leverage custom Application Specific Integrated Circuits engineered solely for the purpose of performing hashing operations. ASIC mining rigs from outfits like Butterfly Labs can crank out anywhere from 5GH/s all the way up to 250GH/s and use a tiny fraction of the power that the GPUs required. In response to this, the author of CGMiner opted to remove GPU support from the mining software after version 3.7.2, as it was not deemed worth maintaining with GPUs so far behind the ASIC mining rigs. My meager GPU rig would now only yield 0.1BTC after a couple of months of mining. The ante had been raised.

There is a second generation of ASIC mining rigs coming out of Butterfly Labs, based on 28nm chip technology, that is supposed to crank out 300-600GH/s per unit. I’d LOVE to be able to get ahold of some of these new ASIC rigs, but right now they’re out of my league price-wise, especially since Butterfly Labs doesn’t take credit cards; only ACH transfers or Bitcoins are accepted as payment for their rigs. I had amassed enough BTC to purchase a single 25GH/s Butterfly Labs rig, but it is only able to pull in about 0.1BTC every week or so. The heat output is much more manageable, but the fan noise is substantial and the buy-in is pretty steep.

Unfortunately, I bought my 25GH/s mining box a tad too soon. Butterfly Labs just dropped the price of their 50GH/s unit below what I paid for the 25GH/s unit. The 300GH/s and 600GH/s units are not yet available for sale either; they are on pre-order status as of the time that I’m writing this. Once they hit the streets, the ante will be raised once again, with the payout from the various mining pools falling even further. And, of course, the difficulty level of the Bitcoin mining tasks will adjust even higher to compensate for the increased hashing power that’s being brought into the mix.
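For the curious, the difficulty adjustment works roughly like the Python sketch below. The network re-tunes the difficulty every 2016 blocks, aiming for about ten minutes per block (two weeks per batch), and limits any single adjustment to a factor of four. The function name and the sample numbers are my own illustrative choices, and the real client does this with integer math on the target value rather than on a floating-point difficulty.

```python
TARGET_TIMESPAN = 14 * 24 * 60 * 60  # two weeks in seconds: 2016 blocks at 10 minutes each

def retarget(old_difficulty: float, actual_timespan: float) -> float:
    """Scale difficulty so the next 2016 blocks should take about two weeks."""
    # Clamp the measured time so one adjustment changes difficulty by at most 4x.
    clamped = min(max(actual_timespan, TARGET_TIMESPAN / 4), TARGET_TIMESPAN * 4)
    return old_difficulty * TARGET_TIMESPAN / clamped

# Hypothetical example: new hardware comes online and the last 2016 blocks
# arrive in one week instead of two, so the difficulty doubles.
new_difficulty = retarget(1_000_000, 7 * 24 * 60 * 60)
print(new_difficulty)
```

So every batch of faster rigs that gets plugged in makes the problem harder for everyone, which is exactly why my pool payouts keep shrinking.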

The good news is that I now have an awesome gaming workstation for my son and me to use in our PC gaming adventures. That was always my goal for the rig once my experimentation with GPU mining was finished. I also have my Butterfly Labs 25GH/s ASIC mining rig that I obtained with the almost 3 BTC that I managed to mine over the many months of experimentation. I’m now down to 0.3 BTC and I’ll see how far my pooled mining efforts will take me over the winter. I’d love to be able to build up enough to get one of the 600GH/s rigs from Butterfly Labs when they finally launch (sometime in Feb 2014 from what I hear). I refuse to pay my hard-earned cash for a mining rig, only to see the bottom suddenly drop out of the Bitcoin valuation (it’s looking quite bubbly at the moment), but I don’t mind spending virtual currency on them while they’re still useful for something. I also have a spare Radeon HD 7970 graphics card if anybody wants to barter for it.

If you’ve got some Bitcoins that you’d like to donate towards my research and experimentation efforts – feel free to send them my way – I’ll put them to good use ;?)

Donations gratefully accepted at: 1CdbFRVEXJ8Y55B1LATpSmMegKmmy5zvoe

Follow me on Twitter @cpuguru